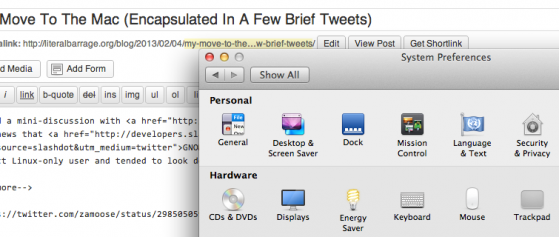

My Move To The Mac (Encapsulated In A Few Brief Tweets)

I had a mini-discussion with Neil Stevens about the genesis of my Mac use, sparked by the news that GNOME is moving to JavaScript as its primary development language. I used to be a strict Linux-only user and tended to…